In the workplace we constantly receive requests from colleagues, and the more senior we get, the more demand there is for our time. That’s natural and expected as we progress in our careers and take on leadership roles.

The way we address these requests has a direct impact on how we are perceived within the company and on the collaborative work environment we want to foster. I see them as opportunities to exercise communication and leadership skills, share knowledge, give feedback and positively impact my team and the business.

Let’s look at how we can approach requests effectively and how seniority plays a role, then analyze a few illustrative examples.

Guidelines

In a nutshell, our communication in these situations should be driven by the following guidelines:

- Employ empathy and nonviolent communication

- Promote a cooperative, trusting, and supportive environment

- Empower colleagues’ autonomy

- Advance colleagues’ technical skills

- Focus on results

Before responding to a request, reflect on whether your communication meets most of the above criteria and does not directly go against any of them. If it is aligned with them, proceed with confidence.

Notice I deliberately highlighted ‘autonomy’, since I believe it plays a central role. In this context, it means that we should encourage our colleagues to seek solutions to their requests as autonomously as possible. It’s like the old saying: ‘Give a man a fish, and you feed him for a day; teach a man to fish, and you feed him for a lifetime’ (even though sometimes we’ll have no option other than to give the fish - due to short deadlines, the counterpart’s lack of experience, etc.).

Seniority

Before heading to the examples, a quick note on how our counterpart’s seniority level affects the way we communicate.

Junior-level colleagues require more care and attention in communication, as they are in an early stage of professional development and need the consideration and support of more senior colleagues to help them grow. Positive and constructive feedback should be given frequently, and we should push them out of their comfort zone, but in a healthy and considerate way.

In senior-to-senior relationships I encourage some friction. We need to have the courage to be honest with each other without beating around the bush, and trust in the maturity of our colleagues to receive feedback that is less polished, but constructive and insightful. This can speed things up and foster a more dynamic environment.

Examples

To illustrate, below are examples of typical responses we often give. The first sentence in red shows an inadequate response according to the guidelines. The following sentence in green shows a more appropriate response. It is important to note that there isn’t only one way to act in each situation, and depending on your background and leadership style, your communication will vary. Additionally, keep in mind that we’re examining scenarios in which junior professionals seek assistance from their senior counterparts.

A)

“Hi Alice, I don't have time to help you, I'm too busy.”

This is a cold response. If you reply like this often, you’re not making yourself available to your colleagues, and you create the perception that the door to collaboration is closed.

“Hi Alice, unfortunately I'm very short on time today due to a priority activity I need to finish by the day's end. Is this urgent, or can we come back to this topic tomorrow? You can also check if Bill is available. Please keep me informed either way.”

This response shows adequate empathy, as it not only provides insight into your own situation but also expresses genuine interest in understanding the urgency behind the request. It further demonstrates cooperation by suggesting a schedule for assistance, possibly the next day. It also points to alternatives, such as checking the availability of another colleague who may be familiar with the subject and could provide assistance.

B)

“Bob, you should be able to do this by yourself. Try harder.”

This can be characterized as a harsh response, especially toward people at a more junior level, as it potentially conveys an unfavorable judgment of the person’s ability and closes the door to collaboration.

“Bob, kindly share what you've attempted so far, along with any unsuccessful attempts. This will help me grasp the context and offer more tailored guidance.”

This response aims to comprehend prior efforts through clear and well-crafted communication, naturally fostering the culture of autonomy valued within the company. It conveys the expectation that individuals have made an effort to address the issue independently before seeking assistance.

C)

“Hi Alice, look, I've explained this to you several times, do some research.”

Again, this is an example of a harsh response. Even though a subject may already have been discussed in the past, it’s essential to maintain an environment of trust and companionship by communicating more thoughtfully.

“Hi Alice, I recall encountering this issue on multiple occasions in the past, and I believe that by revisiting our previous solutions, we can resolve it effectively. Please consider retrieving the approach we took when dealing with a similar situation, such as 'XYZ'. If you have any questions, feel free to reach out. I'm here to assist.”

Here, we assume an honest oversight without passing judgment, recognizing that we can sometimes struggle to recall past situations and apply them to new ones. With time and guidance, we can enhance this skill and progress. This presents a valuable opportunity to support a colleague’s technical development and foster their autonomy.

D)

“Bob, don't worry, I'll take a look and solve this problem by myself, I think it will be faster. I'll let you know when I'm done.”

At first glance, this response appears cooperative and results-oriented. However, it contradicts the autonomy guideline by depriving the individual who sought help of the opportunity to learn and grow from the challenge. This approach can inadvertently foster dependency, as the person may not acquire the skills to handle similar situations independently in the future.

Unless there’s a good reason to ‘hijack’ the problem, for instance due to a short deadline, the following approach is recommended:

“Bob, this is a very interesting problem. Let's discuss it, and I'll assist you in finding a solution. I anticipate it might arise in future scenarios, making it crucial to solidify this learning.”

Now we see the clear mentoring approach, emphasizing the development of colleagues and a forward-looking perspective.

Closing thoughts

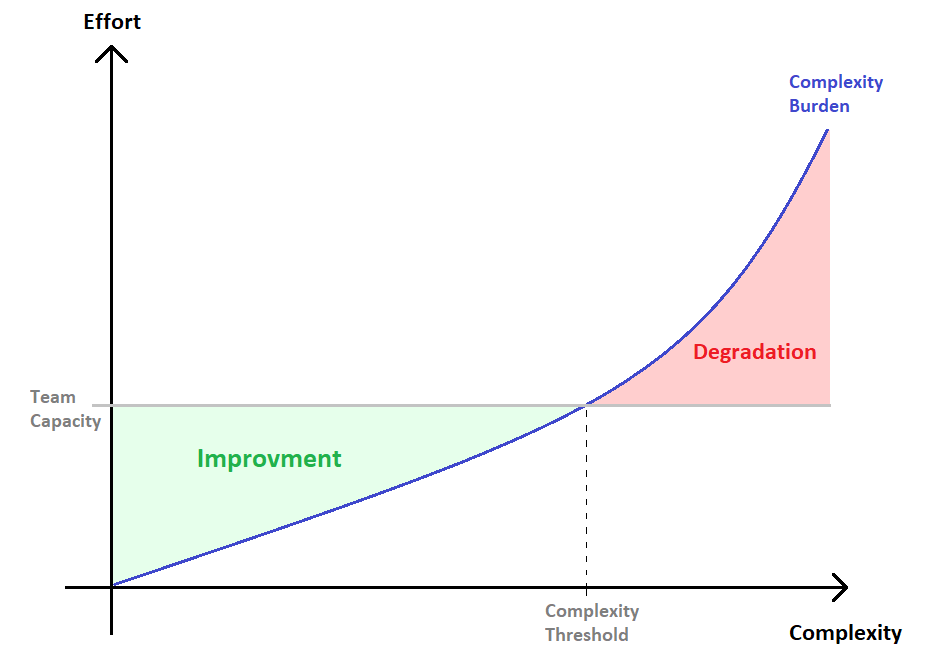

The more senior a team member becomes, the higher the expectation for them to have a positive impact on the team and subsequently on the business, inspiring and pushing their colleagues beyond their comfort zones, and thus earning recognition as a leader among peers.

It’s far from easy; in fact, it’s quite challenging to assume the responsibilities of mentoring and take a prominent role within the team. Effective time management will be essential to strike a balance between safeguarding our personal time and remaining accessible to the team. This means prioritizing tasks, setting clear boundaries, and ensuring that our schedule allows for both focused work and availability to assist our colleagues. By efficiently managing our time, we can fulfill our leadership roles without becoming overwhelmed or unavailable when needed.

The leadership challenge, whether in a managerial or technical role, is substantial, but the personal growth it brings is truly worth the effort.