I have been using AI to help me code (and, honestly, to help with almost everything else) for a couple of years now, and I keep finding new ways it boosts my productivity.

When programming, I keep code suggestions minimal in the IDE and prefer the chat interface for tackling coding tasks. Writing down the problem, its constraints, and the task goals in a structured way has, in my experience, produced great results and visibly boosted my productivity. As a bonus, it has improved the way I communicate about technical challenges with colleagues.

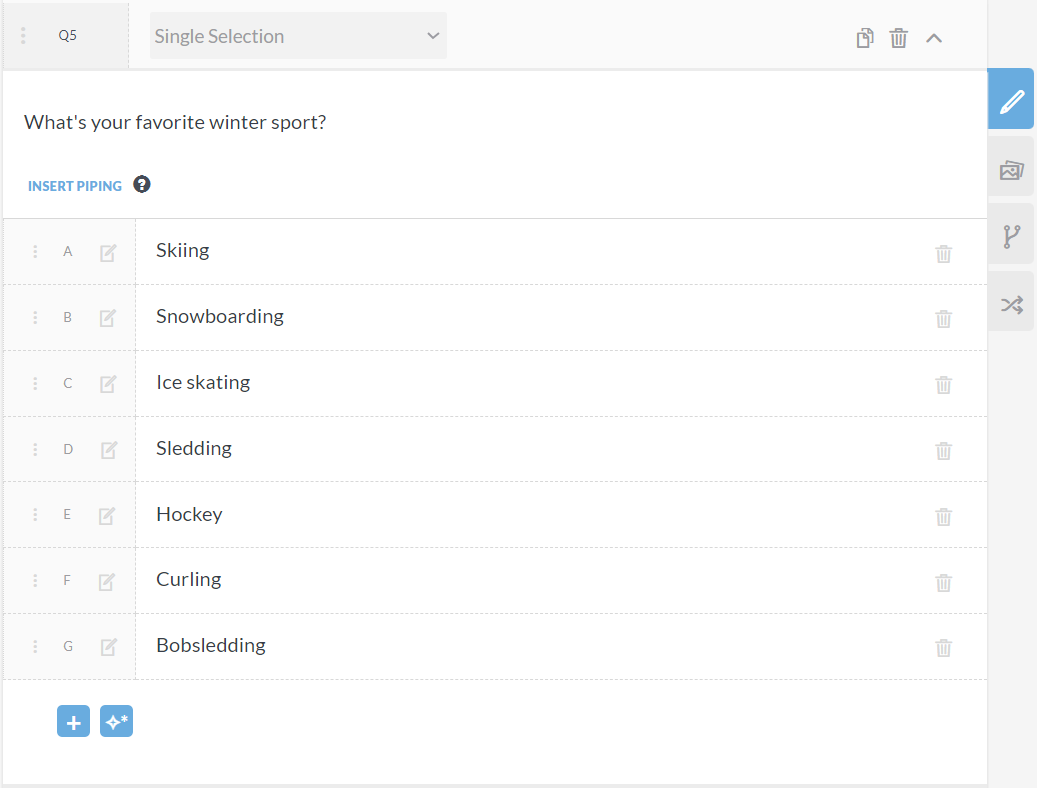

So today I set aside some time to share a simple example as anecdotal evidence. My task was to implement the following feature in a section of our app:

- Automatically compress uploaded images

It took 25 minutes from start to finish. Without AI, I estimate it would have taken me about 2 hours to search for references, write the code, test it, and review it before finishing this task.

👉 That’s roughly a 5x speed-up in this particular case (an estimated 120 minutes versus 25 actual minutes, or about 4.8x).

Here’s a quick overview of how I structured my workflow using ChatGPT (GPT-4o model):

- Define the Problem: I started by clearly defining my task, which was to compress uploaded images automatically in two user flows.

- Generate Code: I asked ChatGPT to create a method for compressing images to high-quality JPEG, providing contextual code from my existing project.

- Integrate Changes: Next, I asked ChatGPT to modify two existing image upload methods so that they use the new compression method.

- Create Unit Tests: Lastly, I asked ChatGPT to write a unit test for the new compression functionality following existing testing styles in the project.

Let’s take a deep dive.

The Workflow in Detail

Initially, I reviewed the source code to understand the user flows I needed to change and to locate the image upload entry points.

After getting familiar with the codebase, I concluded that the project didn’t yet define a method to compress images to JPEG. I also found an ImageExtensions class that contained several methods for performing operations on images (e.g., Rotate, Resize, Generate thumbnail, etc.), which I decided was the right place to define the new compression method.

So, in my first prompt to ChatGPT, I asked it to create the compression method, passing along additional contextual information to ensure the generated code would be compatible with my project:

In its response ChatGPT correctly produced the following method (I’m omitting additional information and the usage sample also returned by ChatGPT):

I skimmed through the code, and it seemed to implement everything I requested, so I copied and pasted the generated code into my project’s ImageExtensions class.
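For readers who want a feel for what such a method does, here is a minimal sketch in Python using the Pillow library. It is not the code ChatGPT generated for my project; the function name `compress_to_jpeg` and the default quality of 90 are assumptions chosen only for illustration.

```python
from io import BytesIO

from PIL import Image  # third-party: pip install Pillow


def compress_to_jpeg(image_bytes: bytes, quality: int = 90) -> bytes:
    """Re-encode an image as JPEG (hypothetical helper, illustrative only)."""
    with Image.open(BytesIO(image_bytes)) as img:
        # JPEG has no alpha channel, so convert to plain RGB first.
        rgb = img.convert("RGB")
        out = BytesIO()
        # quality=90 is an assumed "high quality" setting, not a project value.
        rgb.save(out, format="JPEG", quality=quality, optimize=True)
        return out.getvalue()
```

Calling `compress_to_jpeg(png_bytes)` returns the JPEG-encoded bytes, which can then be stored in place of the original upload. Whether the result is actually smaller depends on the image, so a real implementation may want to compare sizes before replacing the file.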

I continued by asking ChatGPT to adjust two of my application user flows to use the new method, with additional considerations:

As a result, both methods were modified as requested, and ChatGPT proposed a helper method IsUncompressedImage to be used by both methods in accordance with the DRY (“don’t repeat yourself”) principle.

Here’s the final code generated by ChatGPT for one of the methods after the introduced changes:

Again, I reviewed the code and was happy with the proposed changes. After pasting it into my application code, everything compiled without any issues.
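To illustrate the shape of this change, here is a rough Python sketch of a shared check used by an upload path. It is a stand-in, not my project’s code: the signature list, the `is_uncompressed_image` logic, and the `prepare_upload` function are all assumptions about what such a helper might look like.

```python
from typing import Callable

# File signatures for formats treated here as "not yet JPEG-compressed".
# This list is an assumption made for the sketch, not the project's rule.
_UNCOMPRESSED_SIGNATURES = (
    b"\x89PNG\r\n\x1a\n",  # PNG
    b"BM",                 # BMP
    b"II*\x00",            # TIFF, little-endian
    b"MM\x00*",            # TIFF, big-endian
)


def is_uncompressed_image(data: bytes) -> bool:
    """Return True if the bytes start with one of the signatures above."""
    return data.startswith(_UNCOMPRESSED_SIGNATURES)


def prepare_upload(data: bytes, compress: Callable[[bytes], bytes]) -> bytes:
    """Compress only when needed; both upload flows could call this."""
    return compress(data) if is_uncompressed_image(data) else data
```

Keeping the check in one helper means each upload flow needs only a single call, and the rule for what counts as "needs compression" lives in one place.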

Finally, before closing the code changes, I wanted to create a unit test for the new CompressToJpeg method, so I sent another message to ChatGPT to create the test method analogous to another test in my project:

And voilà, the unit test was correctly created according to my request:

After pasting the new test method into my test class, I was able to quickly run it and confirm that it passed 🟢.

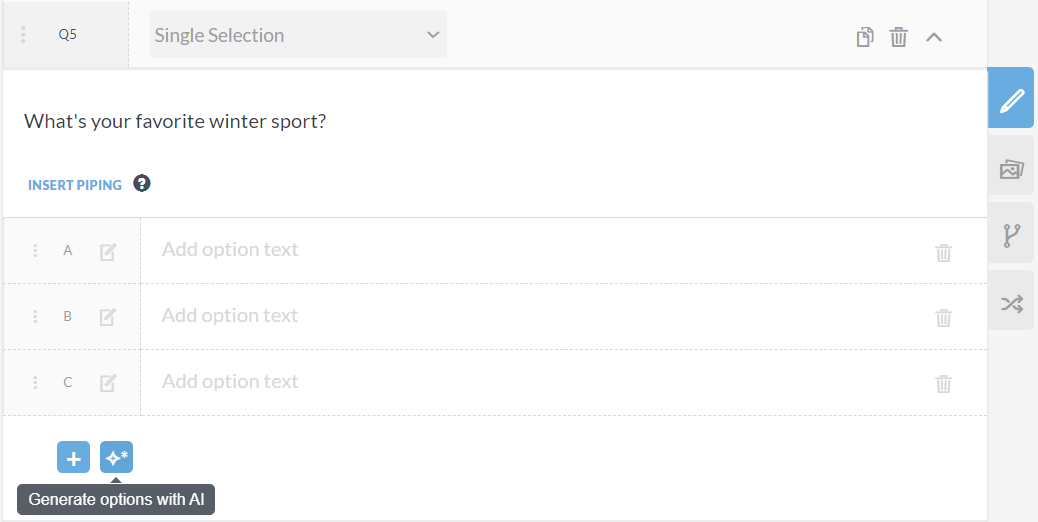

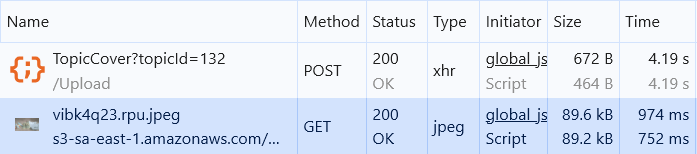

Once I finished the code changes, I performed a manual test to validate that the task was completed:

Success!

Wrapping Up

This was just one of many examples where AI tools can cut the time it takes to complete routine code structuring and fiddly tasks. This task was a great candidate because it was mostly digital plumbing, relying on high-level operations (like JPEG compression) already provided by the underlying framework.

Several studies try to measure how much of a productivity gain AI tools deliver. At the end of the day, it all depends on how frequently and how well you use them in your workflows.

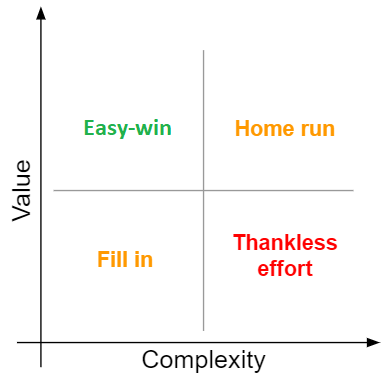

As with anything, usage brings experience, and we become better at identifying the best situations and strategies for extracting productivity from these tools. A naive approach of blindly using AI in everything may backfire.

My complete conversation with ChatGPT can be accessed in the link below: