I walked into a budget meeting expecting the usual spreadsheet tour: what went up, what went down, and where we should trim next year. We did that. But in the middle of the call, something else surfaced: we were debating how decisions should happen, not just what the numbers were.

Someone suggested a simple rule: any spend above a threshold should go to a committee for approval. It was well-intentioned, practical, and it looked good on paper.

My first reaction was: this won’t work.

Not because the threshold was too high or too low. But because the framing was off. It pushed strategy down to the operational level. It asked leaders across the company to act like mini-CFOs, to weigh trade-offs they don’t have context for. It created a system that depended on individual judgment instead of a repeatable path.

That’s the opposite of systems thinking.

Systems thinking

Systems thinking means you don’t fix outcomes only by giving people more decisions. You fix outcomes by designing the system that shapes those decisions. If you want different behavior, you redesign the structure: the incentives, the sequence, the guardrails.

In practice, that means leadership has to do the hard part: define the path and remove ambiguity. The operation executes within that path.

So instead of telling everyone “spend less,” I prefer to ask: what are the rails that will naturally lead to better spending?

Enabling constraints (the rails)

That’s where enabling constraints come in. They are rules and flows that:

- limit the decision space,

- make the right choice the default,

- and leave autonomy intact.

In our case, the first draft of the policy was “anything above X needs approval.” The systems-thinking version was different:

- First, internalize. If a request is about an external vendor, the first step is always to route it through the internal capability. Only if that path is exhausted does the approval flow even start. The point isn’t “protecting internal teams.” It’s reducing avoidable spend and fragmentation when the capability already exists, and escalating fast when it doesn’t.

- Second, decide at the right moment. The worst time to approve a vendor is when the proposal is already on the table and the deadline is tomorrow. The flow needs to exist upstream, where the decision can be made calmly.

- Third, separate strategy from execution. Teams should focus on delivery and client outcomes. Leadership should define the guardrails together with the people who run the work, and then monitor how the system behaves.

The difference is subtle but huge. Instead of asking people to make better decisions in chaotic situations, you reshape the process so the right decision is the easy one.

None of this eliminates judgment. It just moves judgment to the right layer: design the default path once, then let the organization run it repeatedly.

What I proposed

I proposed a lightweight “rail system” built around the reality of our operation:

- Define the default path. For certain categories (vendors, external analysis, specialized work), the default is internal first. If it can’t be done internally, the request escalates.

- Make the moment explicit. Add checkpoints inside the workflow where the request must be raised early, not when it’s already late.

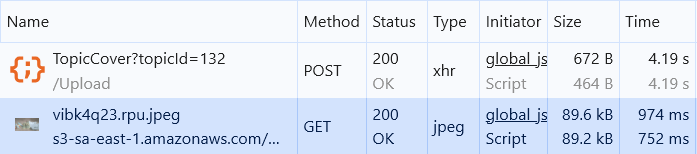

- Keep approvals simple. No ceremonies, no weekly meetings, no bureaucracy. Just clear inputs, fast review, and a decision trail. “Clear inputs” means scope, deadline, expected outcome, alternatives considered, and a rough cost envelope.

- Define exception paths. These cover legal/compliance requirements, incident response, hard external deadlines, and unique expertise not available internally. If an exception applies, the flow skips steps, but still leaves a decision trail.

In other words: leadership designs the system; the team follows the rails.
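For readers who think in code, the rails can be sketched as a small routing function. This is only an illustration under stated assumptions, not the policy we actually run: every name here (`SpendRequest`, `route_request`, the `Route` values, the field names) is hypothetical, and the order of the checks is my own reading of the list above. In particular, I assume an exception skips both the input check and the internal-first step.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Route(Enum):
    NEEDS_INPUTS = "needs_inputs"      # request is incomplete, send it back
    EXCEPTION = "exception"            # exception path, steps are skipped
    INTERNAL_FIRST = "internal_first"  # default path
    FAST_REVIEW = "fast_review"        # escalated to a lightweight approval


# Exception reasons mirror the list above; the identifiers are hypothetical.
EXCEPTION_REASONS = {
    "legal_compliance",
    "incident_response",
    "hard_external_deadline",
    "unique_expertise",
}

# The "clear inputs" a request should carry before anyone reviews it.
REQUIRED_INPUTS = (
    "scope",
    "deadline",
    "expected_outcome",
    "alternatives_considered",
    "cost_envelope",
)


@dataclass
class SpendRequest:
    inputs: dict
    internal_capability_exists: bool
    internal_path_exhausted: bool = False
    exception_reason: Optional[str] = None


@dataclass
class Decision:
    route: Route
    trail: list = field(default_factory=list)  # every outcome leaves a trail


def route_request(req: SpendRequest) -> Decision:
    # Assumption: exceptions are checked first, so they skip later steps.
    if req.exception_reason in EXCEPTION_REASONS:
        return Decision(Route.EXCEPTION, [f"exception: {req.exception_reason}"])

    # Incomplete requests go back to the requester instead of to a reviewer.
    missing = [name for name in REQUIRED_INPUTS if not req.inputs.get(name)]
    if missing:
        return Decision(Route.NEEDS_INPUTS, [f"missing inputs: {', '.join(missing)}"])

    # Default path: try the internal capability before any approval starts.
    if req.internal_capability_exists and not req.internal_path_exhausted:
        return Decision(Route.INTERNAL_FIRST, ["default path: internal first"])

    # No internal option left: escalate to a fast review.
    return Decision(Route.FAST_REVIEW, ["internal path unavailable or exhausted"])
```

Notice that there is no spend threshold anywhere in the function. The person raising the request never decides whether it is "big enough" to escalate; the path is determined by the category of the request and by whether the internal route has been tried.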

Closing thoughts

This is not about being “tough on costs.” It’s about clarity. If you want a company to scale, you cannot rely on individual heroics or ad-hoc judgments. You need a system that consistently nudges people toward the strategic path.

And building those systems is not easy. You need to identify what should change, understand why the current structure enables the old behavior, visualize alternatives, and think through side effects, incentives, and how to earn buy-in from the people involved. That’s real work.

You know the rails are working when urgent approvals go down, repeat vendors consolidate, and lead time for “yes/no” decisions becomes predictable.

The easy thing is to outsource the problem to leaders who are already constrained in their day-to-day. “Just have them approve everything” sounds simple, but it’s naive to think that passing the burden downstream will solve the root issue.

Build the rails. Then let the train move fast.